What do you know about virtualization?

Wednesday, December 24, 2008 Category : VMware 3

Musings on areas of technology that affect the Enterprise. Focus on Cloud, Virtualisation, Storage and Data Center.


Tuesday, December 23, 2008 Category : cloud, VMware 5

James Urquhart, over at CNET in the Wisdom of Clouds blog, has an interesting post about "A maturity model for cloud computing".

The post is worth a quick read. It lists five high-level steps; to quote:

At a very high level, each step of the model breaks down like this:

Consolidation is achieved as data centers discover ways to reduce redundancy and wasted space and equipment by measured planning of both architecture (including facilities allocation and design) and process.

Abstraction occurs when data centers decouple the workloads and payloads of their data center infrastructure from the physical infrastructure itself, and manage to the abstraction instead of the infrastructure.

Automation comes into play when data centers systematically remove manual labor requirements for run time operation of the data center.

Utility is the stage at which data centers introduce the concepts of self-service and metering.

Market is achieved when utilities can be brought together over the Internet to create an open competitive marketplace for IT capabilities (an "Inter-cloud", so to speak).

Sunday, December 21, 2008 Category : VMware 2

Unless you have spent the last 48 hours entrapped in a shopping center doing last minute Christmas shopping you will have seen that the new name for the next version of VMware has been officially leaked. vSphere it is.

I say officially leaked because no one was game to spill the beans until someone with enough authority gave the okay. The name was mentioned at a user group meeting last week and, wanting to report on it, Jason Boche got authority from VMware marketing to say it in a wider forum. You do wonder if this was a plan by VMware. It seems strange to have the big name change for your key product launched via a user group and the blogosphere. Are they just following the hype that Veeam are getting with the release of their new free product? I doubt it. Did they feel that it was going to get out anyway, so they might as well be part of it? Maybe. Let's see how long it takes for the official press release to appear.

At the end of the day it's just a name (sorry Marketing). This new version has been called many things, starting out with K/L. At VMworld you would hear lots of VMware employees use the phrase "K/L" and the Beta forum is labeled "K/L". Of course most people have been calling it VI4. It will be interesting to see how this name integrates into all the other recent name changes, VDC-OS et al.

Things could be worse. Can you imagine what it was like for all of those die-hard Citrix fans? One day your company buys this thing called Xen and then after a few months they rename just about every product in your suite by putting Xen in front of it. Now that has to put the whole vSphere name into perspective. It could have been EMCompute or something. Although I think Sean Clark gets the prize for Atmos-vSphere, because EMC have Atmos, their cloud optimized storage. Atmos-vSphere, it has a certain ring to it, don't you think?

Rodos

Friday, December 19, 2008 Category : VMware 1

HA has always been an interest of mine; it's such a cool and effective feature of VMware ESX. Whilst it is so simple and effective, the understanding of how it actually works is often a black art, essentially because VMware have left much of it undocumented. Don't start me on slot calculation.

So this week another edge case for HA came to my attention. Over on the VMTN forum there has been a discussion about the redundancy level of blade chassis. You can read all of the gory details (debate) there but Virtek highlighted an interesting scenario. Here is what Virtek had to say.

“I have also seen customers with 2 Blade Chassis in a C7000 6 Blades in each. A firmware issue affected all switch modules simultaneously, instantly isolating all blades in the same chassis. Because they were the first 6 blades built it took down all 5 Primary HA agents. The VMs powered down and never powered back up. Because of this I recommend using two chassis and limiting cluster size to 8 nodes to ensure that the 5 primary nodes will never all reside on the same chassis.

My point is that blades are a good solution but require special planning and configuration to do right.”

I have never thought of that before, but the resolution can be better; this is not a reason to limit your cluster size.

    /opt/vmware/aam/bin/ftcli -domain vmware -connect YOURESXHOST -port 8042 -timeout 60 -cmd "listnodes"

    Node                    Type         State
    ----------------------- ------------ --------------
    esx1                    Primary      Agent Running
    esx2                    Primary      Agent Running
    esx3                    Secondary    Agent Running
    esx4                    Primary      Agent Running
    esx5                    Primary      Agent Running
    esx6                    Secondary    Agent Running
    esx7                    Primary      Agent Running

If you want some more details on how HA works, Duncan Epping has a great summary over at YellowBricks.
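As a rough illustration of the check that Virtek's scenario calls for, here is a minimal Python sketch that parses `listnodes`-style output like the sample in this post and reports how the primary nodes are spread across chassis. The host-to-chassis mapping and the function names are hypothetical, and the parser simply assumes the three-column layout shown here rather than any documented format.

```python
from collections import Counter

# Sample text in the same shape as the listnodes output shown in this post.
SAMPLE_OUTPUT = """\
Node Type State
----------------------- ------------ --------------
esx1 Primary Agent Running
esx2 Primary Agent Running
esx3 Secondary Agent Running
esx4 Primary Agent Running
esx5 Primary Agent Running
esx6 Secondary Agent Running
esx7 Primary Agent Running
"""

# Hypothetical host-to-chassis mapping; substitute your own inventory.
CHASSIS_OF = {
    "esx1": "chassis-A", "esx2": "chassis-A",
    "esx3": "chassis-A", "esx4": "chassis-A",
    "esx5": "chassis-B", "esx6": "chassis-B", "esx7": "chassis-B",
}


def primary_nodes(listnodes_output):
    """Return the host names whose Type column reads 'Primary'."""
    primaries = []
    for line in listnodes_output.splitlines():
        fields = line.split()
        # Data rows look like: <host> <Primary|Secondary> Agent Running.
        # The header and separator rows do not match and are skipped.
        if len(fields) >= 2 and fields[1] == "Primary":
            primaries.append(fields[0])
    return primaries


def primaries_per_chassis(primaries, chassis_of):
    """Count how many primary nodes live in each chassis."""
    return Counter(chassis_of[host] for host in primaries)


def all_primaries_share_chassis(primaries, chassis_of):
    """True when every primary sits in one chassis (the risky case)."""
    return len(primaries_per_chassis(primaries, chassis_of)) == 1


primaries = primary_nodes(SAMPLE_OUTPUT)
print(primaries_per_chassis(primaries, CHASSIS_OF))
print("all primaries in one chassis:",
      all_primaries_share_chassis(primaries, CHASSIS_OF))
```

Running something like this after building or rebuilding a cluster would flag the situation described above, where losing one chassis takes out every primary agent.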

Thursday, December 18, 2008 Category : VMware 2

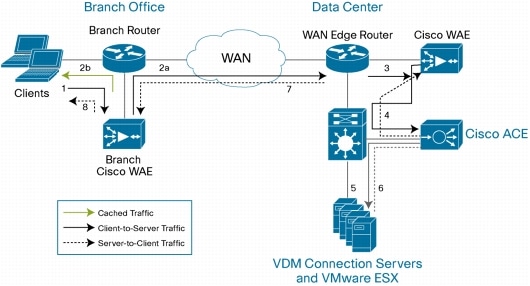

On Tuesday I posted about VDI and WAAS, as part of an ongoing discussion on WAN optimisation for VDI.

Today I finally got some deeper detail from Cisco about VDI and WAAS, shout out to Brad for putting me onto it.

The document is the "Cisco Application Networking Services for VMware Virtual Desktop Infrastructure Deployment Guide" and it is well worth your time to have a flick through.

If you are looking at putting in WAN acceleration for VDI/RDP then you should read through this document, no matter what vendor you are looking at, to give you some good detail.

For example it details traffic flows, configuration details and performance results.

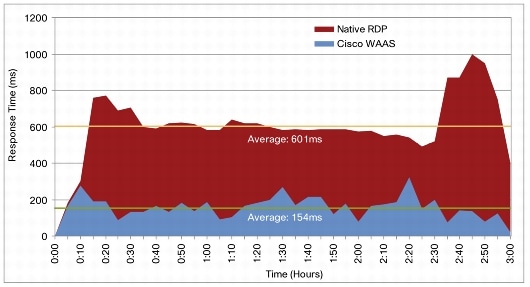

One of the interesting results is the response time. Remember, as discussed, it's the response time that is critical in VDI/RDP over a WAN, rather than the bandwidth. In a test over a 1.5Mbps line with a 100ms RTT, the results were:

The response time measured at the remote branch office during a test of 15 simultaneous VMware VDI sessions shows a 4-times improvement. Cisco WAAS acceleration results in an average response time of 154 ms, and native VMware VDI achieves an average response time of 601 ms (Figure 18).

New documents added to the "VMware Technical Resource documents listing" on VMTN.

At version 17 there are now 198 documents listed with abstracts for searching.

VMware View Reference Architecture Kit

by VMware on 12/09/2008

Wednesday, December 17, 2008 Category : VMware 1

Have you wondered why it's the end of the year and you are just exhausted? Maybe it's because for the last 12 months you have been trying to keep up with the frantic pace that is the virtualisation world from a VMware perspective. Here is a brief recap of what you had to digest and manage this past year.

Jan

Feb

Have you ever been confused by all of those maximums and descriptions in the Fibre Channel section of the Configuration Maximums reference document? Which ones are for a host and which are for a cluster? Well, read on to find out.

Here is the table that lists the limits for Fibre Channel.

Whilst it’s simple it can be confusing. Why do some have “per server” and others don’t? When it says "Number of paths to a LUN", is that across the cluster or for the host? Are you sure?

Well here is some clarification.

LUNs per server 256

On your ESX host you can only zone 256 LUNs to that host. That’s a big number.

LUN size 2TB

Your LUN can’t be bigger than 2TB, but you can use extents to combine multiple LUNs to make a larger datastore. Most SANs will not create a LUN larger than 2TB either.

Number of paths to a LUN 32

This is the confusing one, because it does not have “per server” and then the seed of doubt is sown. To confirm, this is a server metric, not a cluster one. Also note that only active paths are counted. So if you have an active/passive SAN that’s one path, even if you have redundant HBAs. If it’s an Active/Active SAN you can have four paths (one for each combination of HBA and SP). In some high end storage arrays, like an HDS USP/VM, you can configure way more than 32 paths to a single LUN. Now that’s some scalability and redundancy.

Number of total paths on a server 1024

So if you have two HBAs on an Active/Active SAN, that’s four paths per LUN, and 1024 paths divided by four gives a maximum of 256 LUNs on the host. Hey, go figure, that matches the LUNs per server limit!
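The arithmetic can be sketched in a few lines of Python. The two limits are the ones quoted in this post; the function name is just for illustration, and the paths-per-LUN figure assumes an Active/Active array with one path per HBA and SP combination.

```python
MAX_PATHS_PER_SERVER = 1024  # "Number of total paths on a server"
MAX_LUNS_PER_SERVER = 256    # "LUNs per server"


def max_luns_for_paths(hbas, storage_processors):
    """LUNs a host can see before hitting either the path or the LUN limit."""
    # Assumption: Active/Active array, one path per HBA/SP combination.
    paths_per_lun = hbas * storage_processors
    limit_from_paths = MAX_PATHS_PER_SERVER // paths_per_lun
    return min(limit_from_paths, MAX_LUNS_PER_SERVER)


print(max_luns_for_paths(hbas=2, storage_processors=2))
```

With more paths per LUN, for example four HBAs against two SPs, the total path limit becomes the tighter constraint and the host runs out of paths before it reaches 256 LUNs.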

LUNs concurrently opened by all virtual machines 256

Again, you can only be talking to 256 LUNs from the one server.

LUN ID 255

No, it's not a misprint; LUN counting starts at 0. This is effectively cluster wide because, for multipathing to work properly, each LUN must present the same LUN ID number to all ESX Server hosts.

So what do you really need to be worried about for the maximums? 256 LUNs is your limit per cluster and host, and you can have up to four active paths for each (but ESX will only use one path at a time for a particular LUN). Of course, as Edward recently pointed out, the real limit may be your SAN, as some have limitations on how many hosts per LUN.
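The "same LUN ID on every host" rule is easy to check once you have the presentations written down. Here is a minimal Python sketch, assuming you have already collected a mapping of host to LUN name to LUN ID from your array or hosts; the data structure, names and sample values are all hypothetical.

```python
def inconsistent_lun_ids(presentations):
    """Find LUNs presented with different IDs to different hosts.

    presentations: {host: {lun_name: lun_id}}
    returns:       {lun_name: {host: lun_id}} for mismatched LUNs only
    """
    seen = {}
    for host, luns in presentations.items():
        for lun_name, lun_id in luns.items():
            # LUN IDs run from 0 to 255, as per the maximums above.
            if not 0 <= lun_id <= 255:
                raise ValueError(f"{host}: LUN ID {lun_id} is out of range")
            seen.setdefault(lun_name, {})[host] = lun_id
    return {name: ids for name, ids in seen.items()
            if len(set(ids.values())) > 1}


# Hypothetical example: lun_b is presented as ID 1 to esx1 but ID 2 to esx2.
example = {
    "esx1": {"lun_a": 0, "lun_b": 1},
    "esx2": {"lun_a": 0, "lun_b": 2},
}
print(inconsistent_lun_ids(example))
```

An empty result means every LUN shows the same ID to every host that can see it.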

What other things should you be looking out for then? Here is a quick dump of some of the considerations in regards to FC and pathing.

“Because VMware View Manager supports a variety of back ends, such as VMware View virtual desktops, Microsoft Terminal Services, Blade PCs, and ordinary PCs, it is recommended that a robust profile management solution be implemented. Profile management solutions such as RTO Virtual Profiles or Appsense can work in place of, or in conjunction with, a VMware View Composer user data disk. Profile management helps to ensure that personal settings will always be available to users who are entitled to multiple back-end resources, regardless of the system they are accessing. Profile management solutions also help to reduce logon and logoff times and to ensure profile integrity.”

“1,000 users can easily be maintained by this architecture [with fast application response time] using the provided server, network, storage resources, and configuration”.

Tuesday, December 16, 2008 Category : VMware 1

Further to my previous post, "Is network acceleration useful for VDI?", Cisco have a post up on their Data Center Networks blog about VDI. The white paper linked off this post states:

The joint Cisco and VMware solution optimizes VMware VDI delivery and allows customers to achieve the benefits of VMware VDI by providing the following features:

- Near-LAN performance for virtual desktops over the WAN, improving performance by 70 percent

- Increased scalability of the number of VMware VDI clients, increasing the number of clients supported by 2 to 4 times, and massive scalability of VMware VDI and VMware VDM data center infrastructure

- 60 to 70 percent reduction in WAN bandwidth requirements

- Optimization of printing over the WAN by 70 percent, with the option of a local print server hosted on the Cisco WAAS appliance

- Improved business continuity by accelerating virtual image backup by up to 50 times and reducing bandwidth by more than 90 percent

Just like other vendors, some bold claims. Some of these are simply distractions: improving transport of virtual image backup across a WAN link for DR purposes is not really a VDI issue. That's stretching the friendship and moving into marketing spin. However, I have seen some of the internal Cisco analysis on the acceleration of RDP/VDI and it does look to stack up. Hopefully in the new year I will have completed some testing.

Monday, December 15, 2008 Category : cloud, VMware 1

What would an Enterprise, in particular one using virtualisation, take away from a Cloud computing event? What if the speakers were from Cisco, Yahoo, Google, Microsoft, Baker & McKenzie and Deloitte Digital? Well, two weeks ago I went to such an event, took lots of notes and engaged in some interesting discussions. Here is some of what occurred and my updated thoughts on the Cloud space.

The event was run by Key Forums and held in Sydney, Australia on the 3rd of December. Billed as a one day, comprehensive conference, the expectation was:

This conference will kick start your Cloud strategy and will get you up to speed on what the main players, critics and users think about the potential of Cloud Computing. It will give you the opportunity to discuss your issues surrounding Cloud Computing with your peers and our expert panel of speakers.

The speakers were local heavy hitters and an impressive list. Some of their presentations are available online and worth a look. The Google one was originally public but has been pulled at Google’s request. Of course the presentation from the legal firm was never public, go figure.

SaaS is going to be a massive market. However, in the main this adoption is going to be more in growing new services that are tactical, rather than the main game of organizations' core business processes. Yes, Cisco may move to Salesforce.com, but for most enterprises core elements are going to stay in house. It’s the new systems and non-core that are going to see the most growth in SaaS.

When that project team needs a new intranet site, rather than waiting two weeks for IT to not deliver, they will put their credit card number into a website and be up and running in 10 minutes.

The challenge for VMware here, as well as the opportunity, is to capture some of this space with Virtual Appliances. If a mature set of Virtual Appliances can be available through the market place; if these can be downloaded from within the management environment painlessly; and if they can not only describe their service levels, backup and disaster recovery requirements (vApps) but also implement them automatically (AppSpeed, Data Recovery Appliance, SRM), VMware could be onto a good slice of this pie. Put your credit card in, download the service and run it locally in your own security model, owning the data. You can even still have the provider maintain the application as part of a maintenance agreement. Most of the benefits of SaaS without many of the current concerns and limits. VMware need to lead by example here.

PaaS is the challenging space. Microsoft is going to push .Net real hard, and that means Azure. The challenge for all of the players is to support open frameworks such as Hadoop and Ruby on Rails. This strategy is good for customers in the enterprise. If they develop their own applications on these open frameworks they can execute them internally on their own clouds. After all, a key element of VDC-OS is running the workloads of today and tomorrow. With VDC-OS you can run mixes of workloads and change them on the fly; one day 10% of your cluster might be running Hadoop nodes and tomorrow, to scale up for a specific project workload, it may be 30%. Even better, because you have written to an open standard you can go to a provider in the open market to buy capacity for the short or medium term, if you really need to scale up. Even if you run that application out in the external cloud, if there is a problem, or for testing, or for DR, you can always run it in house on your own cloud if needed. VMware need to work hard here to not let the ISV market get away from them. Maybe that’s why Paul Maritz can’t stop saying Ruby on Rails.

IaaS is VMware’s sweet spot. The enterprises know they need to move to the benefits that cloud and utility based computing can bring. They want to run like Google and Yahoo! The challenge is how to do this in today’s environment and that’s where VDC-OS, vCloud and vApp come into play. Running internal clouds, federating them, running the workloads of today and tomorrow: it's not just a dream, it's like Christmas, you know it's coming and it's not far away. The problem for VMware is to not be seen like Azure and be another closed shop. That’s why it’s good to see vApp being based on OVF. We know VMware is the best system for running workloads; if we can keep the portability of workloads it means the best technology wins. If it all goes closed, the best marketing company wins, and that is not VMware. Also VMware need to keep tight (like they already are) with complementing technologies. Networking is going to play a huge role in enablement of the cloud, from things like split VLANs to WAN acceleration.

If you are in Australia and interested in cloud computing like I am, I would recommend you put March 25th, 2009 in your calendar. IDC are holding a Cloud Computing Conference on this day in Sydney. See, aren’t you glad that you read all the way to the end of this post! You can register for free as an early bird attendee.

Thursday, December 11, 2008 Category : VMware 7

Over at vinternals Stu asks whether linked clones are really the panacea for the VDI storage problem that a lot of people are claiming. I say yes; however, we are moving from designing for capacity to designing for performance, and VMware have given us some good tools to manage it. Let me explain a bit further.

Stu essentially raises two issues.

First, delta disks grow more than you think. Stu considers that growth is going to be a lot more than people expect, citing that NTFS typically writes to zeroed blocks before deleted ones and that there is lots of activity on the system disk, even if you have done a reasonable job at locking it down.

Second, SCSI reservations. People are paranoid about SCSI reservations and avoid snapshot longevity as much as possible. With a datastore just full of delta disks that continually grow, are we setting up ourselves for an "epic fail"?

These are good questions. I think what this highlights is that with Composer the focus for VDI storage has shifted from capacity management to performance management. Where before we were concerned with how to deliver a couple of TB of data, now we are concerned with how to deliver a few hundred GB of data at a suitable rate.

In regards to the delta disk growth issue: yes, these disks are going to grow, however this is why we have the automated desktop refresh to take the machine back to the clean delta disk. The refresh can be performed on demand, as a timed event or when the delta disk reaches a certain size. What this means is that the problem can be easily managed and designed for. We can plan for storage overcommit and set the pools up to manage themselves.

To me the big storage problem we had was preparing for the worst case scenario. Every desktop would consume either 10GB or 20GB even though most actually used much less than 10GB. Why? Just in case! Just in case one or two machines do lots of activity, and because we had NO easy means of resizing them we also had to be conservative about the starting point. With Composer we can start with a 10GB image but only allocate used space. If we install new applications and decide we really do need the capacity to grow to 12GB, we can create a new master and perform a recomposition of the machines. Now we are no longer building for the worst case but managing for used space only. This is a significant shift.
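To put some rough numbers on that shift, here is a back-of-the-envelope Python sketch comparing the two sizing approaches. The desktop count, the single replica and the average delta size are made-up inputs for illustration, not VMware figures; only the 10GB image size comes from the paragraph above.

```python
def full_clone_gb(desktops, disk_gb):
    """Worst-case sizing: every desktop gets its full disk up front."""
    return desktops * disk_gb


def linked_clone_gb(desktops, replica_gb, replicas, avg_delta_gb):
    """Used-space sizing: shared replica(s) plus one delta disk per desktop."""
    return replicas * replica_gb + desktops * avg_delta_gb


# Hypothetical pool: 1000 desktops on a 10GB image, one replica, and an
# assumed average delta of 0.5GB held in check by the refresh policy.
before = full_clone_gb(desktops=1000, disk_gb=10)
after = linked_clone_gb(desktops=1000, replica_gb=10, replicas=1,
                        avg_delta_gb=0.5)
print(f"full clones: {before}GB, linked clones: {after}GB")
```

The interesting design input is the average delta size, which is exactly what the refresh policy (on demand, timed, or size triggered) lets you control.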

It so happens that today there was a blog posting about Project Minty Fresh. This installation has a problem with maintaining the integrity of their desktops. As a result they are putting a policy in place to refresh the OS every 5 days. This will not only maintain their SOE integrity but also keep their storage overcommit in check.

In regards to SCSI reservations, I do believe that the delta disks still grow in 16MB increments and not some larger size. So when the delta disks are growing there will be reservations, and you will have many on the one datastore. Is this a problem? I think not.

In the VMware world we have always been concerned about SCSI reservations because of server workloads. For server workloads we want to ensure fast and, more importantly, predictable performance. If we have lots of snapshots, that SQL database system which usually runs fine now starts to behave a little differently. Predictability or consistency in performance is sometimes more important than the actual speed. My estimation is that desktop workloads are going to be quite different. In our favor we have concurrency and users. All those users are going to have a lower concurrency of activity, so given the right balance we should have a manageable amount of SCSI reservations. If not, we rebalance our datastores: same space, just more LUNs. Also, unlike servers, will users be able to perceive any SCSI reservation hits as they go about their activity? Given the nature of users' work profile, and that any large IOs should be redirected not into the OS disk but into their network shares or user drives, the problem may not be as relevant as we may expect.
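One rough way to reason about this is to estimate how often delta disks grow on a single datastore. The Python sketch below assumes, as discussed above, that each delta disk grows in 16MB steps and that each growth step costs one reservation. The desktop counts and write rates are invented for illustration and should be replaced with measurements from your own environment.

```python
GROW_STEP_MB = 16  # delta disk growth increment assumed in this post


def growth_events_per_hour(desktops, new_data_mb_per_hour_each):
    """Approximate delta-disk growth events (one reservation each) per hour."""
    return desktops * new_data_mb_per_hour_each / GROW_STEP_MB


def events_per_datastore(desktops, new_data_mb_per_hour_each, datastores):
    """The same load spread evenly over several LUNs/datastores."""
    total = growth_events_per_hour(desktops, new_data_mb_per_hour_each)
    return total / datastores


# Hypothetical: 64 desktops each writing 32MB of new blocks per hour.
print(events_per_datastore(64, 32, datastores=1))
print(events_per_datastore(64, 32, datastores=2))
```

This is the "same space, just more LUNs" rebalancing in numbers: splitting the same desktops over twice as many datastores halves the growth events each one has to absorb.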

What Stu did not mention, and what we do need to be careful of because it can be the elephant in the room, is IO storms. This is where we really do have some potential risk. If a particular activity causes a high concurrency of IO activity things could get very interesting.

Lastly, as Stu points out, statelessness is the goal for VDI deployments. Using application virtualisation, locking down the OS to a suitable level and redirecting file activity to appropriate user or networked storage is going to make a big impact on the IO profile. These are activities we want to undertake in any event, so the effort has multiple benefits.

I too believe you need to try this out in your environment, not just for the storage requirements, but also for the CPU, user experience, device capabilities and operational changes. VDI has come a long way with this release and I do strongly believe it will enable impactful storage savings.

What I really want is the offline feature to become supported rather than just being experimental. Plus I want it to support the Composer based pools. There is no reason why it can't and until then, there is still some way to go before we can address the breadth of use cases. However there are plenty of use cases now, which form the bulk, to sink our teeth into.

Rodos

Two new documents added to the "VMware Technical Resource documents listing" on VMTN.

At version 16 there are now 197 documents listed with abstracts for searching.

Added Storage Design Options for VMware Virtual Desktop Infrastructure

Added Using IP Multicast with VMware ESX 3.5

http://communities.vmware.com/docs/DOC-2590

Rodos

VMware have released a new searchable HCL system that makes it much easier to check for compatibility. What does it look like and how do you use it? Read on.

Here is the URL

http://www.vmware.com/resources/compatibility/search.php

and the following image is an example search.

What do we have here? Let's say you have a BOM for a system and it includes a new card you are not familiar with, an NC360T. Enter that into the keyword search on the IOs tab. Great news, it's supported in just about all versions, and they are all listed in front of you. It could not be easier!

The page comes back with a number of components in the results. The first is a categorization based on partners. Let's say you type in something generic; this lets you quickly filter down to a particular vendor or subset. Excellent feature.

For the results many of the details are hyperlinks. I have shown an exploded view of what it looks like when you click on an element. The opening page shows the specific details of that item.

This is a great new feature and is going to make our job so much easier. Do yourself a favor and go and have a play with it, then create a bookmark!

Rodos

Wednesday, December 10, 2008 Category : VMware 2

Many of the WAN acceleration vendors (Cisco, Riverbed, Expand, Packeteer, Citrix WANScaler, Exinda) are spouting some amazing statistics for improvement of VDI performance over a WAN. However, are these claims realistic? How does one navigate the VDI and WAN acceleration space?

Here is a claim from one vendor.

Expand’s VDI solution can provide acceleration by an average of 300% with peaks of up to 1000% for virtual desktop user traffic.

Is this realistic? Sounds like a lot of sales and marketing to me. I remember reading a white paper from one vendor spouting their lead in the acceleration of VDI, which consisted of the argument that by reducing the bandwidth of the other protocols over the link VDI would magically get faster. Whilst true, if that’s all there is it’s not really an improvement on RDP, is it? I am sure this was Riverbed but I can’t find the paper and it's not on their website anymore. Instead, on the page about virtualisation they talk about ACE in the desktop space. Good grief, get with the program: no mention of VDI at all.

Wednesday, December 03, 2008 Category : VMware 1

Do you think that VMware have too many locations for important or relevant technical materials? It's starting to feel like there are a lot of places where good content has the potential to be isolated or fragmented.

Here are some of the places that I know of, just off the top of my head, which contain content.

Tuesday, December 02, 2008 Category : VMware 3

Can I turn 16TB of storage for 1000 VDI users into 619GB? Let me show you how it’s actually done. The release today of VMware View Manager 3 brings to market the long anticipated thin provisioning of storage for virtual desktops. Previewed in 2007 as SVI (Scalable Virtual Images), what does this now released View Composer linked clone technology look like under the hood? How much storage will it actually use?

Here is the diagram presented on page 94 of the View Manager Administration Guide (http://www.vmware.com/pdf/viewmanager3_admin_guide.pdf).

This diagram as presented is a conceptual view of the storage. The important logical elements to note here are

VMware admins are always looking for quick reference to whitepapers and best practices. How do you do that?

Well there are some great sites which list links such as VMware-land. However the most popular document in VMTN (for non desktop products) is the "VMware Technical Resource Documents Listing" at http://communities.vmware.com/docs/DOC-2590

In a single page you will find the title, abstract and link to the PDF for every VMware published technical note. There are currently 195 documents listed.

The source for the listing is the VMware technical resources document list, however this has no usable search function, hence why this alternate listing was originally created. The excellent thing about the document list page is within seconds you can quick search with your browser for any keyword. Go on give it a try, jump over and search for a keyword that interests you. You may be surprised to find a paper that you never knew existed.

In the 11 months since I created this page it has had over 10,000 hits. This is dwarfed by some of the Fusion documents, one of which has over 33,000. VMware, it's probably time to improve the search facility of the technical resource list. It would be great to be able to search within the documents; until then, at least we have a usable alternative.

Rodos
