In the tradition of social media, we bring you the first ever (and possibly last) vForum booth awards. Success is based on top-secret criteria that award points in several random and sarcastic categories, along with a little honest opinion.

Rodney Haywood and Alastair Cooke (@DemitasseNZ / www.demitasse.co.nz) have dedicated minutes of their time to bringing you the best and worst of the booths, saving you the arduous journey through the crowds.

In no particular order, our awardees are:

Dedication to Booth Duty Award

Not a booth award, this is a personal award for the person who has shown outstanding effort above and beyond the call of duty.

David Caddick of Quest Software wins this award for still coming to, and standing at, the Quest booth all day after requiring four stitches in his leg this morning. David’s enthusiasm for an early jog led him to a lacerating encounter with the back stairs.

Well done, David, for not giving up and being there.

The Is There Anybody Home Award

This award recognises the booth that is there but fails to show any visible sign of life. Does a booth send a positive message when there is nothing and no one there to deliver it?

Charles Sturt University wins this award for the apparent absence of life or anything informative (apart from a mobile Esky). The presence of cold beer in said Esky would have removed this booth from eligibility for this award; alas, it was a case of a pub with no beer.

Thank you, come again.

The Puritan’s Booth Babe Award

This award is inspired by Thomas Dureya’s annual effort to decrease the amount of clothing worn by vForum Booth Babes. Our puritan family values cannot condone the use of the scantily clad female form as a marketing tool at a male-dominated event, hence our award goes to the Booth Babes presenting the height of puritan values.

The award goes to the VCE booth, where the Booth Babes were covered from ankle to neck, along with modest head coverings. This is an excellent representation of Slip, Slop, Slap that would survive even a summer’s day on Bondi Beach.

We are very pleased to see a number of women with excellent technical knowledge staffing the booths, not simply Booth Babes.

Show Me The Hardware Award

This award recognises the hardware vendor who has failed to show their product. Attendees are interested in viewing and discussing your wares, which is hard to do if there is only a brochure.

The award goes to Dell, one of the largest server and storage hardware vendors, who only had a few laptops on their stand showing PowerPoint. We were not the only critics to notice the lack of tin.

Maybe it’s all in the cloud.

Bravery Award

This award goes to a vendor that has gone the furthest and taken the risks. The unkind could call them cowboys, but we call them courageous.

The award goes to the brave boys at CoRAID, a new entrant into the storage market in Australia. With little local sales presence and only imminent VMware HCL support they nonetheless braved the big money vendors and brought actual hardware with blinking lights. Great to see them giving it a go in this crowded space.

Bigger and better next year.

Interactive Playground Award

This award is for the booth where the geeks got to play. vForum is about engaging and learning; this award celebrates the booths that do this well.

The award goes to Cisco, whose booth had a structured schedule of open briefings on their products, with excellent giveaways. There were a number of blades you could touch and open up to look inside; the hardware wasn’t just there, it was there to be investigated. Numerous technical staff were on hand, actively seeking questions to answer.

Geeks delivering to Geeks.

Buffet Award

This award recognises the booth with the broadest range of products and solutions visible. There needs to be something for everyone and the whole family should go home having had their fill.

The award goes to VMware who, despite it being their own event, showed an enormous range of products at a detailed level; every product was actually there to be touched and used. More than a dozen products were identifiable from a distance, and there were experts on them all.

No one-trick pony.

Most Appealing Booth Award

This award recognises the booth that stands out and draws you to it. With so many booths, they all become a blur, so something different must greet the eye.

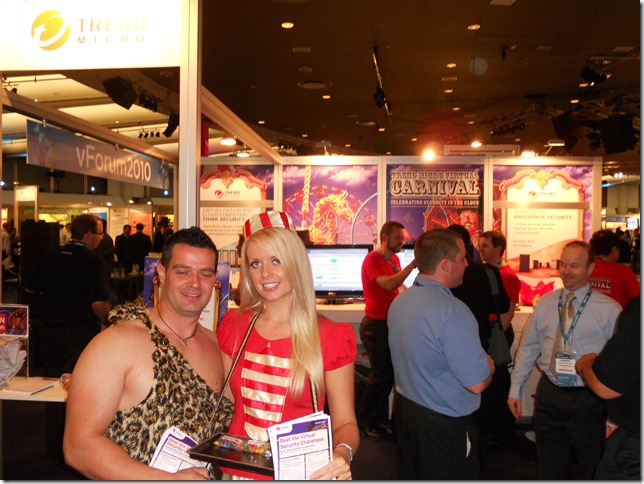

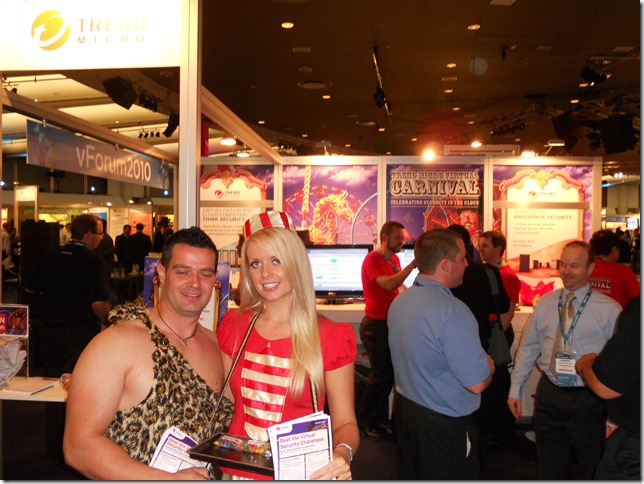

The award goes to Trend Micro for their appealing Carnival theme; from a distance you could see it wasn’t your ordinary booth. With the space limitations and sundry restrictions placed on exhibitors by the event organisers, it takes effort to stand out.

Who says conferences are all a circus?

Thanks to all the exhibitors who make the event so valuable, and congratulations to the winners. All those that didn’t make this year’s list should be planning for next year’s awards.

Rodos and Alastair

We are looking for 20 people attending from Australia who are willing to donate their unused VMworld 2010 bag to the